📜 Lecture Notes

* These notes summarize what I studied in Professor Andrew Ng's Sequence Models course on Coursera.

* Image source: Deeplearning.AI

Recurrent Neural Networks

1. Why Sequence Models?

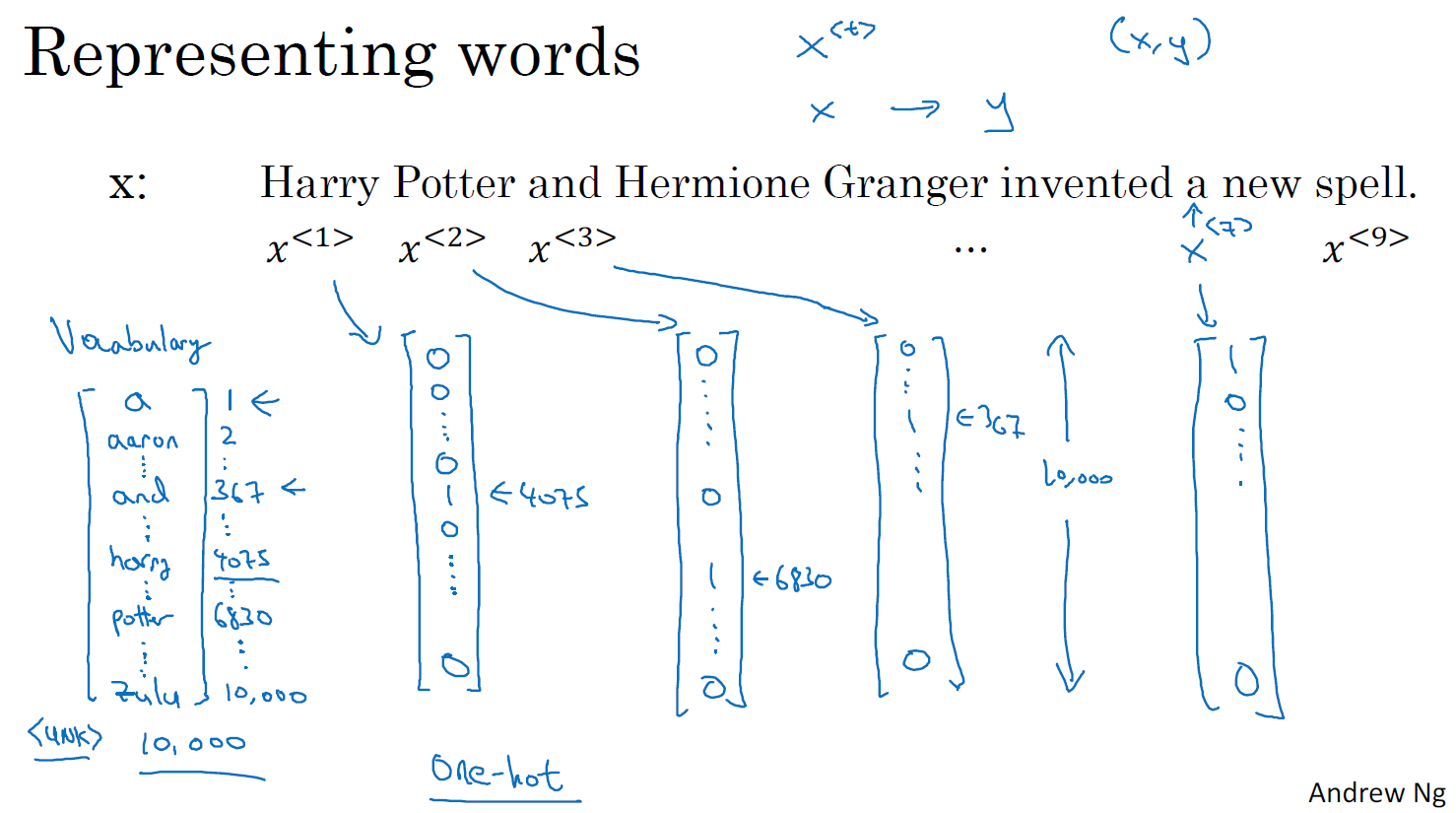

2. Notation

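As in the earlier courses, the i-th example is marked with (i), and a position within the sequence x_(i) is marked with <t>.

Given a dictionary of 10K words, each word is represented by a 10,000-dimensional one-hot vector that encodes the word's position in the dictionary.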
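The one-hot encoding described above can be sketched as follows. This is a minimal illustration, assuming a hypothetical five-word vocabulary in place of the 10K-word dictionary; the names `vocab`, `word_to_index`, and `one_hot` are made up for this example.

```python
import numpy as np

# Hypothetical toy vocabulary; the notes assume a 10,000-word dictionary.
vocab = ["a", "and", "harry", "potter", "zulu"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word, word_to_index):
    """Return a vector of zeros with a single 1 at the word's dictionary index."""
    vec = np.zeros(len(word_to_index))
    vec[word_to_index[word]] = 1.0
    return vec

# "harry" sits at index 2, so only position 2 is set.
x = one_hot("harry", word_to_index)
```

With a real 10K-word dictionary the vector would have 10,000 entries, all zero except one.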

3. Recurrent Neural Network Model

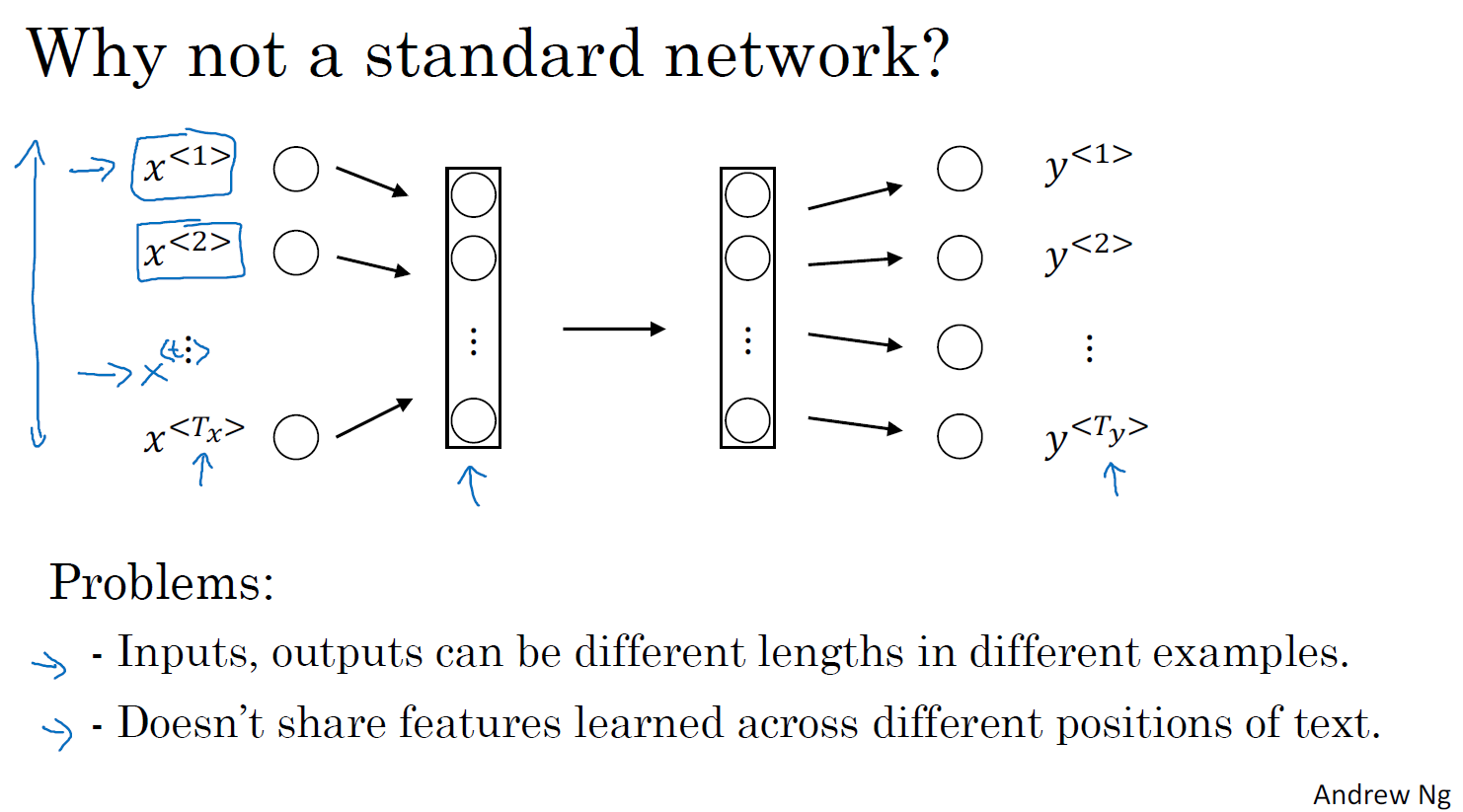

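A standard neural network is a poor fit for sentences for two reasons: the input and output lengths are not fixed, and the importance of a given word varies from sentence to sentence, which makes it hard to extract features.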

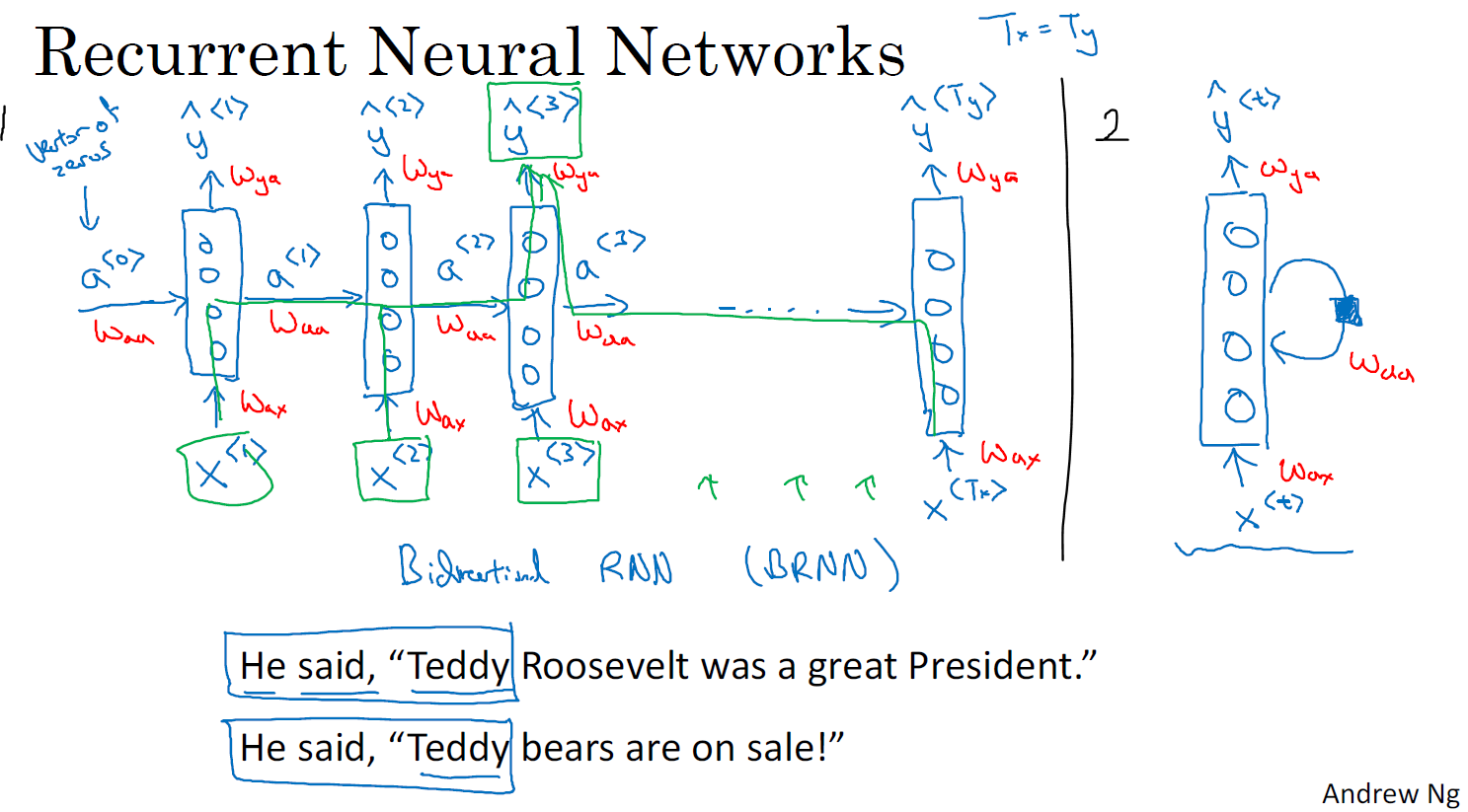

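A recurrent neural network can be drawn as a diagram in two ways. It is usually drawn in the form on the right (2), but the form on the left (1) is more intuitive. The words of a sentence are fed into the network one at a time (x_<t>), together with the activation from the previous step (a_<t-1>).

Because the previous activation is passed along, x_<1>, x_<2>, and x_<3> all influence the computation of y_<3>.

The limitation of this RNN is that, while x_<1>, x_<2>, and x_<3> influence y_<3>, later words such as x_<4> and x_<5> are not taken into account. In the Teddy example from the lecture, the preceding words alone are not enough to tell whether Teddy refers to a teddy bear or to a president.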

Writing the process above out cleanly, the detailed equations for a and y are as follows.

This can be written more compactly by stacking a and x into a single block, as shown below.
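The original figure with these equations is not reproduced here. As a sketch in the course's usual notation, assuming an initial activation $a^{\langle 0 \rangle} = 0$ and activation functions $g$ (typically tanh for $a$, and sigmoid or softmax for $\hat{y}$), the forward pass at time step $t$ is commonly written as:

$$
a^{\langle t \rangle} = g\left(W_{aa}\, a^{\langle t-1 \rangle} + W_{ax}\, x^{\langle t \rangle} + b_a\right)
$$

$$
\hat{y}^{\langle t \rangle} = g\left(W_{ya}\, a^{\langle t \rangle} + b_y\right)
$$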

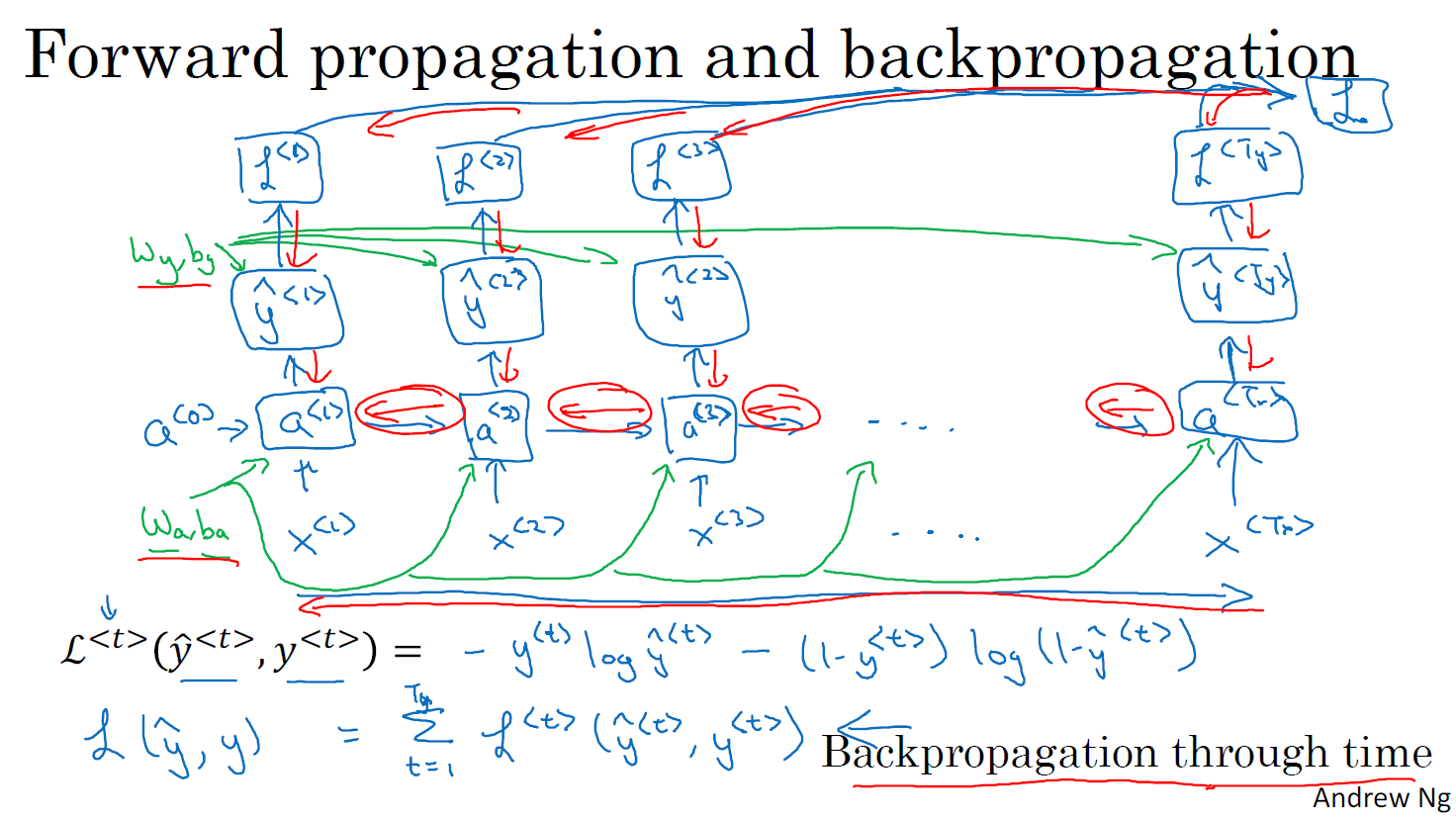
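To make the stacking idea concrete, here is a minimal NumPy sketch. It assumes the usual form a_<t> = tanh(W_aa a_<t-1> + W_ax x_<t> + b_a); the layer sizes and random weights are hypothetical and chosen only for illustration. Placing W_aa and W_ax side by side as W_a, and stacking a_<t-1> on top of x_<t>, should give the same activation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_a, n_x = 4, 3  # hypothetical hidden size and input size

W_aa = rng.standard_normal((n_a, n_a))
W_ax = rng.standard_normal((n_a, n_x))
b_a = np.zeros((n_a, 1))

a_prev = rng.standard_normal((n_a, 1))  # a_<t-1>
x_t = rng.standard_normal((n_x, 1))     # x_<t>

# Separate form: two matrix products added together.
a_separate = np.tanh(W_aa @ a_prev + W_ax @ x_t + b_a)

# Stacked form: W_a = [W_aa | W_ax] applied to [a_<t-1>; x_<t>].
W_a = np.hstack([W_aa, W_ax])        # shape (n_a, n_a + n_x)
stacked = np.vstack([a_prev, x_t])   # shape (n_a + n_x, 1)
a_stacked = np.tanh(W_a @ stacked + b_a)

same = np.allclose(a_separate, a_stacked)
```

The two forms agree because a block matrix product splits into the sum of the per-block products, so the stacked notation is only a bookkeeping convenience.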

4. Backpropagation through time

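Backpropagation through time is carried out here with the cross-entropy loss, the same loss used for logistic regression.

The motivating example so far has been an architecture in which the input sequence and the output sequence have the same length (Tx = Ty).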
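As a rough sketch of the loss that backpropagation through time differentiates, the snippet below computes a binary cross-entropy at each time step and sums it over the sequence. The predictions and labels are made-up values, and the function name `cross_entropy_step` is hypothetical.

```python
import numpy as np

def cross_entropy_step(y_hat, y):
    """Binary cross-entropy for one time step (works elementwise on arrays)."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

# Hypothetical predictions and labels for a 4-step sequence.
y_hat = np.array([0.9, 0.2, 0.7, 0.1])
y = np.array([1, 0, 1, 0])

per_step = cross_entropy_step(y_hat, y)  # one loss value per time step
total_loss = per_step.sum()              # sequence loss = sum over time steps
```

Gradients of `total_loss` flow backward from the last time step to the first, which is where the name "backpropagation through time" comes from.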

5. Different Types of RNNs

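RNNs come in many-to-many forms, where Tx = Ty or where the two lengths differ, and in many-to-one forms. Machine translation is an example of many-to-many, although there the input and output lengths generally differ (Tx != Ty); converting a movie review into a rating is an example of many-to-one.

Beyond these, there are other types of RNN such as one-to-one and one-to-many.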
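The difference between many-to-many (with Tx = Ty) and many-to-one can be sketched with a single forward pass. This is a minimal illustration, assuming a basic tanh RNN with a sigmoid output; the function `rnn_forward`, the sizes, and the random weights are all hypothetical.

```python
import numpy as np

def rnn_forward(xs, W_aa, W_ax, W_ya, b_a, b_y):
    """Run a basic RNN over xs (a list of column vectors); return one output per step."""
    a = np.zeros((W_aa.shape[0], 1))  # initial activation a_<0>
    outputs = []
    for x_t in xs:
        a = np.tanh(W_aa @ a + W_ax @ x_t + b_a)
        y_hat = 1.0 / (1.0 + np.exp(-(W_ya @ a + b_y)))  # sigmoid output
        outputs.append(y_hat)
    return outputs

rng = np.random.default_rng(0)
n_a, n_x, T_x = 4, 3, 5  # hypothetical sizes and sequence length
params = (
    rng.standard_normal((n_a, n_a)),  # W_aa
    rng.standard_normal((n_a, n_x)),  # W_ax
    rng.standard_normal((1, n_a)),    # W_ya
    np.zeros((n_a, 1)),               # b_a
    np.zeros((1, 1)),                 # b_y
)
xs = [rng.standard_normal((n_x, 1)) for _ in range(T_x)]

many_to_many = rnn_forward(xs, *params)  # one output per input step (Ty == Tx)
many_to_one = many_to_many[-1]           # keep only the final output (e.g. a rating)
```

In a real many-to-one model only the last step would have an output layer; slicing the final output here is just the shortest way to show the shape difference.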

6. Language Model and Sequence Generation
