↓↓↓↓↓↓↓ This post continues from the post below. ↓↓↓↓↓↓↓

1. Training Object Detection on My Own Data: Object Detection with Custom Dataset :: tensorflow

When I ran object detection with YOLO on the Jetson Nano, it was far too slow... It didn't seem to reach even 10 FPS... So I looked around and saw an example of SSD MobileNet running quickly on the Jetson Nano...

seoftware.tistory.com

4. Evaluation

▶ Run eval.py

```shell
$ python research/object_detection/legacy/eval.py \
    --logtostderr \
    --pipeline_config_path=ssd_mobilenet_v2_coco.config \
    --checkpoint_dir=train \
    --eval_dir=eval
```

Note that eval.py is located in the legacy folder, not directly in the object_detection folder.

eval.py also takes quite a while to run. When it finishes, it reports the accuracy for each class you trained (in my case dog, escooter, and so on).
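Detection metrics such as the per-class scores eval.py reports decide whether a predicted box is correct by how much it overlaps a ground-truth box of the same class, measured as intersection over union (IoU); PASCAL VOC-style evaluation, for example, uses an IoU threshold of 0.5. The minimal sketch below only illustrates that matching rule for boxes in the `[ymin, xmin, ymax, xmax]` order the Object Detection API uses. It is not the evaluator's own code, and the function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as [ymin, xmin, ymax, xmax]."""
    ymin_a, xmin_a, ymax_a, xmax_a = box_a
    ymin_b, xmin_b, ymax_b, xmax_b = box_b
    # Height and width of the overlapping rectangle (0 if the boxes do not overlap).
    inter_h = max(0.0, min(ymax_a, ymax_b) - max(ymin_a, ymin_b))
    inter_w = max(0.0, min(xmax_a, xmax_b) - max(xmin_a, xmin_b))
    intersection = inter_h * inter_w
    area_a = (ymax_a - ymin_a) * (xmax_a - xmin_a)
    area_b = (ymax_b - ymin_b) * (xmax_b - xmin_b)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """Hypothetical rule: a same-class prediction counts as correct if IoU >= threshold."""
    return iou(pred_box, gt_box) >= threshold


if __name__ == '__main__':
    gt = [0.0, 0.0, 1.0, 1.0]
    pred = [0.0, 0.0, 1.0, 0.5]  # covers exactly half of the ground-truth box
    print(iou(gt, pred))  # 0.5
    print(is_true_positive(pred, gt))  # True
```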

5. Model Export

▶ Export the trained model

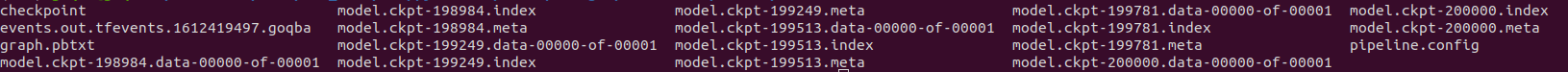

After training, the train folder contains files like the ones shown above.

```shell
$ mkdir fine_tuned_model
$ python research/object_detection/export_inference_graph.py \
    --input_type image_tensor \
    --pipeline_config_path ssd_mobilenet_v2_coco.config \
    --trained_checkpoint_prefix train/model.ckpt-20000 \
    --output_directory fine_tuned_model
```

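The step number in `train/model.ckpt-20000` will likely differ for you: use the largest number among the checkpoint files in the train folder. Do not include the `.meta`, `.index`, or `.data` suffix after the file name.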
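Picking the largest number by eye is easy to get wrong, because checkpoint names compare incorrectly as strings (`model.ckpt-9000` sorts after `model.ckpt-20000`). The hypothetical helper below scans the train folder and compares step numbers as integers; it assumes the default `model.ckpt-<step>` naming shown above. TensorFlow's `tf.train.latest_checkpoint('train')`, which reads the `checkpoint` index file, is an alternative that should usually give the same prefix.

```python
import os
import re

# Matches checkpoint files such as "model.ckpt-20000.index" or
# "model.ckpt-20000.data-00000-of-00001" and captures the prefix and step number.
_CKPT_PATTERN = re.compile(r'^(model\.ckpt-(\d+))\.(?:index|meta|data-\d+-of-\d+)$')


def latest_checkpoint_prefix(train_dir):
    """Return e.g. 'train/model.ckpt-20000' for the largest step in train_dir, or None."""
    best_step, best_prefix = -1, None
    for name in os.listdir(train_dir):
        match = _CKPT_PATTERN.match(name)
        # Compare steps numerically, not as strings.
        if match and int(match.group(2)) > best_step:
            best_step = int(match.group(2))
            best_prefix = os.path.join(train_dir, match.group(1))
    return best_prefix
```

For example, `latest_checkpoint_prefix('train')` would return something like `train/model.ckpt-20000`, which is the value to pass to `--trained_checkpoint_prefix`.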

6. Object Detection with the Trained Model!

The object_detection_tutorial.ipynb file is located under models/research/colab_tutorials. You can open it in Jupyter Notebook and run it there; I instead used a version converted to a Python (.py) file from the blog below.

m.blog.naver.com/sogangori/221212503947

Tensorflow Object Detection API: Installation and Training

Goals: 1. Install the Object Detection API, one of Google's open-source TensorFlow projects, on my PC. 2. Re...

blog.naver.com

I slightly modified the code from the blog above so that it works with TensorFlow 2.*.

Change the values marked `# Here` to match your own paths and number of classes.
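`NUM_CLASSES` is one of the values marked `# Here`. As far as I know, `label_map_util.convert_label_map_to_categories` skips label-map items whose id is larger than `max_num_classes`, so `NUM_CLASSES` should be at least the largest id in label_map.pbtxt. The hypothetical helper below reads that number with a simple regular expression rather than a protobuf parser; it assumes the usual `item { id: N name: '...' }` layout.

```python
import re

# Matches lines such as "  id: 3" inside label_map.pbtxt item blocks.
_ID_PATTERN = re.compile(r'\bid\s*:\s*(\d+)')


def max_label_id(pbtxt_text):
    """Return the largest `id:` value in label-map text, or 0 if there is none."""
    ids = [int(value) for value in _ID_PATTERN.findall(pbtxt_text)]
    return max(ids, default=0)


def num_classes_from_label_map(path):
    """Hypothetical helper: derive NUM_CLASSES from a label_map.pbtxt file."""
    with open(path, encoding='utf-8') as f:
        return max_label_id(f.read())
```

With it, the hard-coded value could become `NUM_CLASSES = num_classes_from_label_map(PATH_TO_LABELS)`.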

```python
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
from PIL import Image

from object_detection.utils import ops as utils_ops
from object_detection.utils import label_map_util
from object_detection.utils import visualization_utils as vis_util

# Note: the original tutorial also downloaded a pretrained COCO model
# (ssd_mobilenet_v2_coco_2018_03_29) at this point. That step is not needed here,
# because PATH_TO_CKPT points at our own exported model.

# Path to the frozen detection graph exported in step 5.
# This is the actual model used for object detection.
PATH_TO_CKPT = '../../fine_tuned_model/frozen_inference_graph.pb'  # Here

# Label map used for training; it supplies the correct label for each box.
PATH_TO_LABELS = '../../annotations/label_map.pbtxt'  # Here

NUM_CLASSES = 21  # Here: number of classes in your label map

# Load the frozen graph into a TF1-style graph (runs under TF 2.x via tf.compat.v1).
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.compat.v1.GraphDef()
    with tf.io.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.compat.v1.import_graph_def(od_graph_def, name='')

# Map class ids to display names.
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


def load_image_into_numpy_array(image):
    # Convert to RGB first so grayscale or RGBA images also give an (H, W, 3) array.
    image = image.convert('RGB')
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


# For the sake of simplicity we use only a few images:
# image1.jpg, image2.jpg, image3.jpg
# To test with your own image, save it in the test_images folder (e.g. as image3.jpg)
# and adjust the range below.
PATH_TO_TEST_IMAGES_DIR = 'test_images'
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i))
                    for i in range(1, 4)]

# Size, in inches, of the output figures.
IMAGE_SIZE = (12, 8)


def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.compat.v1.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.compat.v1.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in ['num_detections', 'detection_boxes', 'detection_scores',
                        'detection_classes', 'detection_masks']:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.compat.v1.get_default_graph().get_tensor_by_name(
                        tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for a single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframing translates masks from box coordinates to image
                # coordinates and fits them to the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0],
                                           [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(
                    detection_masks_reframed, 0)
            image_tensor = tf.compat.v1.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference (the model expects input of shape [1, None, None, 3])
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # All outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict[
                'detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


for image_path in TEST_IMAGE_PATHS:
    image = Image.open(image_path)
    # The array-based representation of the image is used later to prepare
    # the result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Actual detection (the batch dimension is added inside the function).
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    print('img', image_path)
    # Save the result next to the input, e.g. test_images/image1.png
    plt.savefig(os.path.splitext(image_path)[0] + '.png')
    plt.close()  # free the figure before processing the next image
```
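The script only picks up image1.jpg through image3.jpg, so testing a new picture means renaming it and changing `range(1, 4)`. As an alternative (my own suggestion, not from the referenced blog), `TEST_IMAGE_PATHS` could be built from whatever JPEG files are in the folder. The helper name is hypothetical, and it deliberately skips .png files because the script writes its results as .png into the same folder.

```python
import os


def find_test_images(directory='test_images', extensions=('.jpg', '.jpeg')):
    """Return sorted paths of input images in `directory` (case-insensitive extension match)."""
    paths = []
    for name in sorted(os.listdir(directory)):
        if os.path.splitext(name)[1].lower() in extensions:
            paths.append(os.path.join(directory, name))
    return paths
```

It would replace the list comprehension with `TEST_IMAGE_PATHS = find_test_images(PATH_TO_TEST_IMAGES_DIR)`.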

One of my classes was the electric scooter (e_scooter), so I used the code above to check whether it is detected properly.

▶ Original

▶ Result
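Besides looking at the saved image, it can help to print what was detected. The hypothetical helper below assumes the `output_dict` layout returned by `run_inference_for_single_image`: normalized `[ymin, xmin, ymax, xmax]` boxes, class ids that are keys of `category_index`, and scores. The 0.5 cutoff mirrors the default `min_score_thresh` of `visualize_boxes_and_labels_on_image_array`, so the printed list should roughly match the boxes drawn on the result image.

```python
def summarize_detections(boxes, classes, scores, category_index,
                         image_width, image_height, min_score=0.5):
    """Return (name, score, (left, top, right, bottom)) for detections with score >= min_score.

    boxes are normalized [ymin, xmin, ymax, xmax], as in output_dict['detection_boxes'];
    the returned box is converted to pixel coordinates.
    """
    results = []
    for box, cls, score in zip(boxes, classes, scores):
        if score < min_score:
            continue
        ymin, xmin, ymax, xmax = box
        name = category_index.get(int(cls), {}).get('name', 'N/A')
        pixel_box = (round(xmin * image_width), round(ymin * image_height),
                     round(xmax * image_width), round(ymax * image_height))
        results.append((name, float(score), pixel_box))
    return results
```

Inside the loop it could be called as `summarize_detections(output_dict['detection_boxes'], output_dict['detection_classes'], output_dict['detection_scores'], category_index, *image.size)`, since PIL's `image.size` is `(width, height)`.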
